I’ve spent a lot of time this week fascinated by what really separates us from the machines we build. We’ve given them eyes through high-res cameras, ears through sensitive microphones, and even a “brain” through LLMs. But until now, robots have been essentially numb. They could pick up a glass of water, but they couldn’t feel if it was slipping or how cold the condensation was.

That changed for me at CES 2026. While everyone was crowded around the flashy humanoid demos, I spent some time with a company called Ensuring Technology. They’ve unveiled something that I believe is the “missing link” in robotics: a synthetic skin that actually mimics human touch.

Why “Feeling” is the Final Frontier

Think about it: as humans, we don’t just “see” the world; we feel our way through it. When you grab a ripe peach, your brain receives instant feedback about its softness and texture so you don’t crush it. Robots, despite their advanced AI, have historically struggled with this. They rely on visual data and torque sensors, which is like trying to perform surgery while wearing thick oven mitts.

I’ve always felt that for a robot to truly integrate into our homes, whether to help with the dishes or care for the elderly, it needs to understand pressure, texture, and contact. Ensuring Technology’s new “Electronic Skin” (e-skin) aims to solve exactly that.

Tacta: The Fingertip Revolution

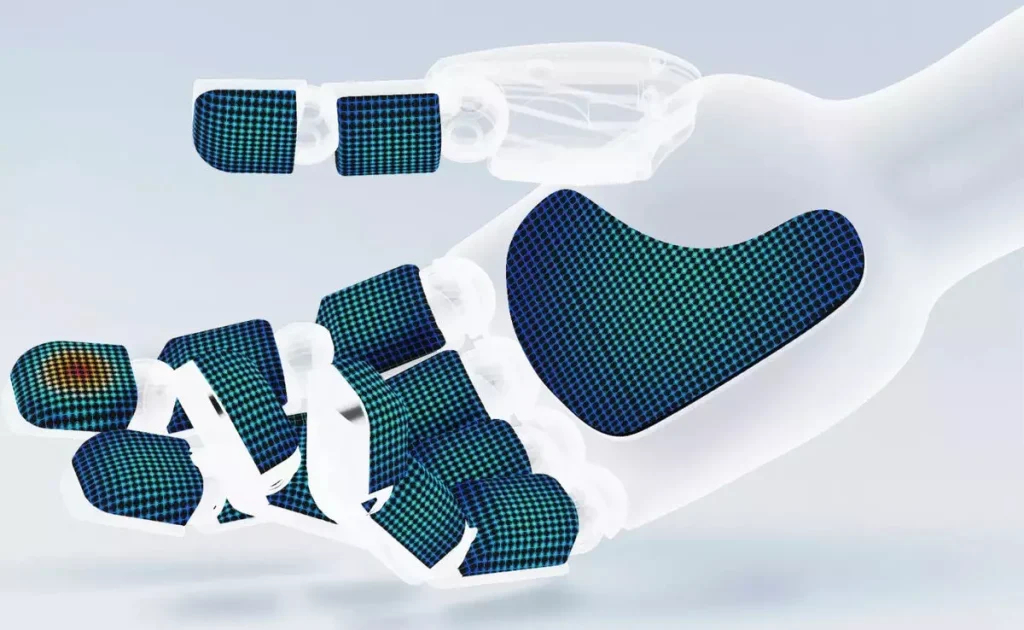

The first product they showed me was Tacta. This is a multi-dimensional tactile sensor specifically designed for robotic hands and fingertips.

I dug into the specs, and honestly, the precision is staggering. Here’s what makes Tacta different from anything I’ve seen before:

- Sensing Density: It features 361 sensing elements per square centimeter. To put that in perspective, that’s roughly comparable to the sensitivity of a human fingertip.
- High-Speed Sampling: The data is sampled at 1000 Hz. This means the robot isn’t just “feeling” once; it’s getting a continuous stream of data, with a fresh reading every millisecond, so it can notice a slip almost as soon as it starts.
- Edge Computing: Despite being only 4.5 mm thick, the sensor combines the sensing layer, data processing, and edge computing in a single module. No bulky external processors needed.
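To get a feel for what those two numbers imply, here is a quick back-of-the-envelope sketch. It uses only the density and sampling rate quoted above; the uniform square grid is my own assumption (361 happens to be 19 × 19), not something the company stated, and the function names are purely illustrative.

```python
import math

ELEMENTS_PER_CM2 = 361   # sensing density quoted in the specs
SAMPLE_RATE_HZ = 1000    # sampling rate quoted in the specs


def element_pitch_mm(elements_per_cm2: float) -> float:
    """Center-to-center spacing in mm, ASSUMING a uniform square grid."""
    elements_per_side = math.sqrt(elements_per_cm2)
    return 10.0 / elements_per_side  # 1 cm = 10 mm


def sample_period_ms(rate_hz: float) -> float:
    """Time between two consecutive readings, in milliseconds."""
    return 1000.0 / rate_hz


def readings_per_second(elements_per_cm2: float, rate_hz: float,
                        area_cm2: float = 1.0) -> float:
    """Raw element readings produced per second over a given area."""
    return elements_per_cm2 * rate_hz * area_cm2


if __name__ == "__main__":
    print(f"pitch: {element_pitch_mm(ELEMENTS_PER_CM2):.2f} mm")
    print(f"period: {sample_period_ms(SAMPLE_RATE_HZ):.1f} ms")
    print(f"readings/s per cm^2: "
          f"{readings_per_second(ELEMENTS_PER_CM2, SAMPLE_RATE_HZ):,.0f}")
```

Under that square-grid assumption the elements sit roughly half a millimeter apart, and a single square centimeter produces 361,000 raw readings per second. That volume of data is a plausible reason to do the processing inside the module instead of shipping everything to an external processor.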

Watching a robotic hand equipped with Tacta pick up a grape without bruising it, and then immediately switch to holding a heavy metal tool, was a “wow” moment for me. It’s not just about strength anymore; it’s about finesse.

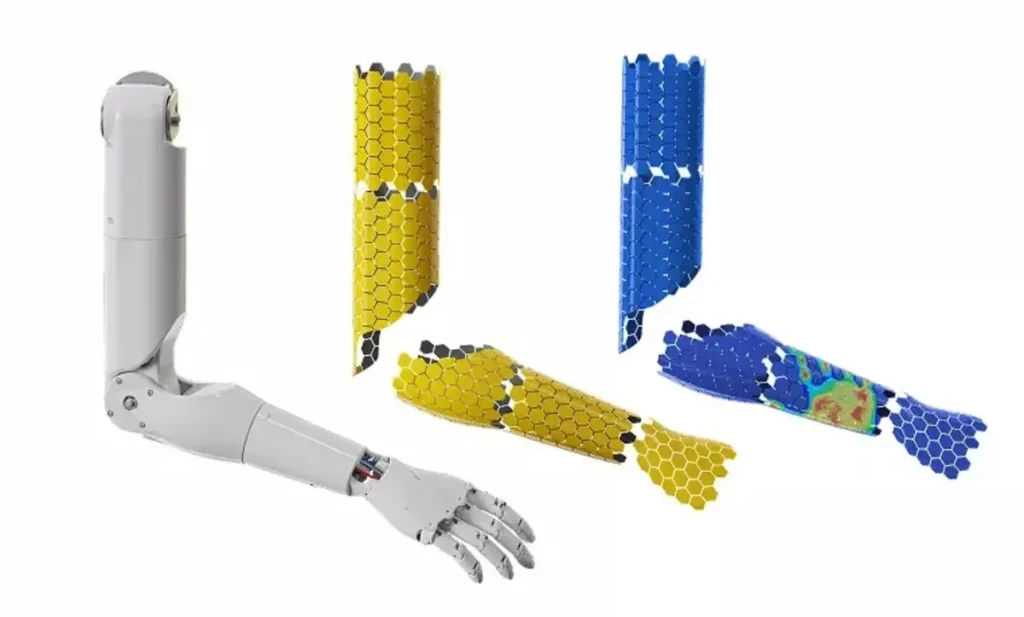

HexSkin: Wrapping the Humanoid Form

While Tacta handles the fine motor skills, HexSkin is designed for the rest of the body. I’ve always wondered how we’d make robots safe to be around. If a 150-kg metal humanoid bumps into you, it needs to know immediately that it has made contact.

HexSkin uses a clever hexagonal, tile-like design. This modular approach allows the skin to be wrapped around complex, curved surfaces like a robot’s forearm, chest, or legs.

- Scalability: Thanks to the hexagonal grid, it can cover large areas without losing sensitivity.
- Collision Awareness: This gives the robot “whole-body” awareness. If someone taps a robot on the shoulder, it doesn’t need to see them to know they’re there.
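For the curious, here is a minimal sketch of how software might turn readings from a hexagonal tile grid into distinct “touches.” This is not Ensuring Technology’s firmware or API; the axial `(q, r)` tile coordinates, the `contact_patches` function, and the `0.5` threshold are all hypothetical choices of mine for illustration.

```python
from collections import deque

# The six neighbor offsets of a hexagon in axial (q, r) coordinates.
HEX_DIRECTIONS = [(1, 0), (1, -1), (0, -1), (-1, 0), (-1, 1), (0, 1)]


def neighbors(tile):
    """Return the six tiles adjacent to `tile` on a hexagonal grid."""
    q, r = tile
    return [(q + dq, r + dr) for dq, dr in HEX_DIRECTIONS]


def contact_patches(pressures, threshold=0.5):
    """Group pressed tiles into connected patches (one per touch).

    `pressures` maps a (q, r) tile to a hypothetical normalized reading;
    a tile counts as pressed when its reading exceeds `threshold`.
    """
    active = {tile for tile, p in pressures.items() if p > threshold}
    patches = []
    while active:
        start = active.pop()
        patch = {start}
        queue = deque([start])
        # Breadth-first search over adjacent pressed tiles.
        while queue:
            current = queue.popleft()
            for n in neighbors(current):
                if n in active:
                    active.remove(n)
                    patch.add(n)
                    queue.append(n)
        patches.append(patch)
    return patches


if __name__ == "__main__":
    readings = {(0, 0): 0.9, (1, 0): 0.8, (5, 5): 0.7, (2, 2): 0.1}
    for patch in contact_patches(readings):
        print(sorted(patch))
```

In this toy example, the two adjacent pressed tiles form one patch and the distant tile forms another, which is the kind of grouping a robot would need to tell a tap on the shoulder from a bump at the hip.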

Why This Matters for the Metaverse and Beyond

You might be wondering why a “Metaverse” brand is covering physical robot skin. For me, the answer is simple: the line between the digital and physical is blurring. We’re moving toward a world of telepresence.

Imagine wearing a haptic suit in the Metaverse while controlling a robot in the real world. If that robot has Tacta skin, you could theoretically “feel” the texture of a fabric or the heat of a cup of coffee from thousands of miles away. This is the hardware that will eventually bridge that gap.

I also think about the safety aspect. I’ve been a bit skeptical about “home robots” because of the physical risk. But a robot that can feel a toddler’s hand or a pet’s tail in its path is a robot I’d actually trust in my living room.

My Take: The Uncanny Valley of Touch

There’s something slightly eerie about a robot having “human-like” touch. We’ve spent so long looking at robots as cold, hard machines. Giving them skin, even if it’s synthetic, makes them feel much more “alive.”

As I watched the Ensuring Technology demo, I realized we’re rapidly closing the gap. With Nvidia’s Rubin architecture providing the processing power and Ensuring’s e-skin providing the sensory input, the “Terminator” or “I, Robot” future is looking less like fiction and more like a scheduled product launch.

Wrapping Up

The breakthrough from Ensuring Technology shows that the next stage of AI isn’t just about better algorithms; it’s about better embodiment. We’re moving from AI that thinks to AI that feels.

I’m curious what you think about this. If we give robots the ability to feel pain or subtle textures, does that change how you view them? Would you feel more comfortable having a robot in your home if you knew it had a “human-like” sense of touch, or does that make them a little too lifelike for your liking?

Let me know your thoughts. I’m genuinely curious whether this crosses a line for you or if it’s the upgrade you’ve been waiting for.